Forget what you’ve seen in the movies – rapid advancements in computer vision, machine learning, computing power, robotics and artificial intelligence are eagerly sought out by militaries around the world to automate kill-decisions. The inherent dual use of most of these technologies and heavy investments in the field demand new international law to address autonomy in weapons systems and to ensure meaningful human control in the use of force.

Stop Killer Robots actively seeks out technology workers and companies as allies to achieve our common goal of a new treaty to regulate autonomy in weapons systems. The private sector will play a key role in preventing the development of these weapons.

FAQs

A treaty addressing autonomy in weapon systems would not stifle innovation, but rather help ensure that the research and development of artificial intelligence continues unhindered. Biologists have not found that the Biological Weapons Convention has hurt their research and development, nor do chemists complain that the Chemical Weapons Convention has negatively impacted their work.

In fact, if the technology to build autonomous weapons is permitted to develop without regulation, many artificial intelligence experts, roboticists, and technology workers fear that positive applications of artificial intelligence will suffer. Any malfunctions, mistakes or war crimes committed by autonomous weapons systems would receive negative publicity, resulting in public pushback on the trend to develop artificial intelligence and other emerging technologies.

Stop Killer Robots is a single-issue coalition focused on securing a treaty to ensure meaningful human control over the use of force and prohibit the targeting of people by autonomous weapons. Our coalition of non-governmental organisations often questions specific military projects that could pave the way for weapons systems with concerning levels of autonomy, but it does not ask or advocate for companies not to work with specific militaries or governments.

We advise technologists to consider the partnerships, customers, and investors they work with, and think critically about the consequential outcomes of any high-risk business relationships that they enter into.

Stop Killer Robots is not anti-technology. We do not oppose military or policing applications of artificial intelligence and emerging technologies in general. As a human-centred campaign, we believe a new international treaty to regulate autonomous weapons would bring many benefits for humanity. New laws would help to clarify the role of human decision-making related to weapons and the use of force in warfare, policing and other circumstances.

The current development of AI and emerging technologies is outpacing policymakers’ ability to regulate. Technology companies and workers must commit not to contribute to the development of autonomous weapons that target people and cannot be operated under meaningful human control.

Many technologies under development are “dual-use”, meaning they can be employed in various scenarios (civilian, military, policing, etc). Therefore, it is crucial that the tech sector remain vigilant and always consider the anticipated or unanticipated end-use.

What can the tech sector do?

Companies:

- Publicly endorse the call to ensure meaningful human control over the use of force and regulate autonomy in weapons systems.

- Make a public commitment not to contribute to the development of autonomous weapons.

- Incorporate this position into existing principles, policy documents, and legal contracts such as acceptable use policies or terms of service.

- Ensure employees are well informed about how their work will be used, and establish internal processes to encourage open discussions on any concerns without fear of retaliation.

Individuals:

- Support the call to ensure meaningful human control over the use of force and regulate autonomy in weapons systems.

- Hold your company, industry, and peers accountable for the research they undertake and the customers they work with.

- Methodically undertake risk assessments to identify unintended uses, consequences or risks associated with the technology you develop.

- Join the International Committee for Robot Arms Control, a co-founder of our campaign at www.icrac.net

Join Our Community

Meet some of our tech sector champions

Laura Nolan

Laura Nolan has been a software engineer in industry for over 15 years. She was one of the (many) signatories of the “cancel Maven” open letter, which called for Google to cancel its involvement in the US DoD’s project to use artificial intelligence technology to analyse drone surveillance footage. She campaigned within Google against Project Maven, before leaving the company in protest against it. In 2018 Laura also founded TechWontBuildIt Dublin, an organisation for technology workers who are concerned about the ethical implications of our industry and the work we do.

Laura holds an MSc in Advanced Software Engineering from University College Dublin and a BA(Mod) in Computer Science from Trinity College Dublin, where she was elected a Trinity Scholar.

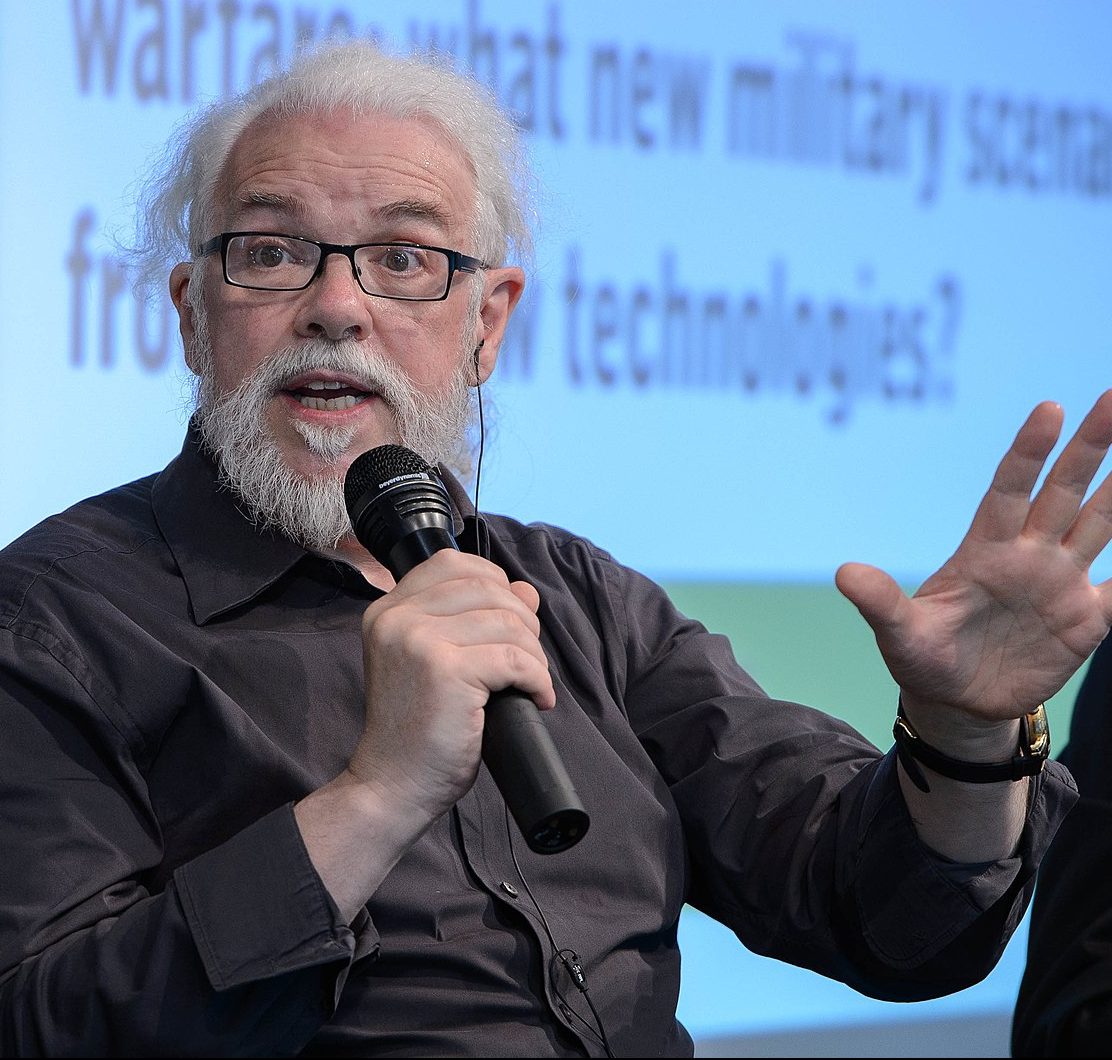

Noel Sharkey

Noel Sharkey PhD, DSc, FIET, FBCS, CITP, FRIN is an Emeritus Professor of Robotics and Artificial Intelligence at the University of Sheffield, UK. He previously held university posts at the Yale AI labs, the Stanford Psychology Department, Essex Language and Linguistics, and Exeter Computer Science. He is a chartered Electrical Engineer and a member of the Experimental Psychology Society and of Equity, the actors’ union.

Noel chairs the International Committee for Robot Arms Control, is director of the Foundation for Responsible Robotics and is a member of the Campaign to Stop Killer Robots Steering Committee. He writes for national newspapers and is best known to the public from popular BBC TV shows like ‘Robot Wars’.

Photo: Heinrich-Böll-Stiftung

A comprehensive treaty is possible

More than 80 countries, including Israel, China, Russia, South Korea, the United Kingdom and the United States, are actively participating in UN talks on how to handle the concerns raised by autonomous weapon systems. After years of discussion, additional pressure from the technology industry is needed to launch formal negotiations on an international treaty to address autonomy in weapons systems and ensure meaningful human control over the use of force.

Policymakers need the world’s brightest minds and researchers at the cutting-edge of AI breakthroughs to prevent harm from the use of AI in warfare and policing.

Endorse the call to stop killer robots.