From smart homes and targeted advertising to the use of robot dogs by law enforcement, artificial intelligence technologies and automated decision-making now play a significant role in our lives. Technology can be amazing. But just because we can build something doesn’t mean we should. Many technologies with varying degrees of autonomy are already being widely rolled out without anyone pausing to consider the consequences of normalising their use. Why do we need to talk about this?

Because machines don’t see us as people, just another piece of code to be processed and sorted.

Digital Dehumanisation: when machines decide, not people.

The technologies we’re worried about reduce living people to data points. Our complex identities, our physical features and our patterns of behaviour are analysed, pattern-matched and sorted into profiles, with decisions about us made by machines according to which pre-programmed profile we fit into.

This has very real consequences for historically marginalised or vulnerable communities. That’s because technology often cements – rather than challenges – already existing biases about who people are. Stereotypes are entrenched by automated decision-making.

What has this got to do with killer robots?

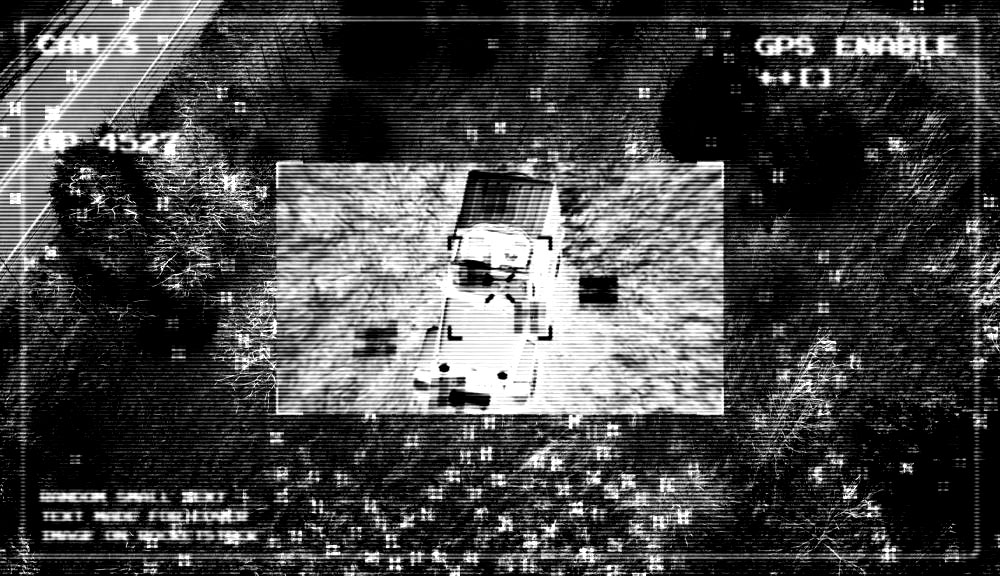

At the most extreme end of the spectrum of increasing automation lie killer robots.

Advances in technology now allow weapons systems to select and attack targets autonomously. This means that in the use of force, we have less human control over what is happening and why. It means we are closer to machines making decisions over who to kill or what to destroy. And for machines there is no difference between a ‘who’ and a ‘what’.

The technologies that will be used in autonomous weapons will reduce people to data and subject them to attack – the ultimate form of digital dehumanisation.

Killer robots are currently being developed for use in armed conflict, which will have devastating consequences. But with the increasing automation of our society, killer robots won’t just be limited to the battlefield. There is no doubt that they will also be used in border control, policing and other areas of society where meaningful human control should be most present.

We can act now to draw a legal and moral line. We all need to take responsibility for the development and use of technology, and for the role it plays in our lives. Killer robots don’t just appear – we create them. And we have an opportunity to act now to stop killer robots, before the technological developments have gone too far.

Less autonomy. More humanity.

So, when it comes to digital dehumanisation – to weapons systems that will target people – we need less autonomy and more humanity.
