How we created a virtual human

(and got AI to interview itself on AI, Autonomy and Digital Dehumanisation)

An article by Stu Mason – Creative Director, Masonry Cartel, for Stop Killer Robots

“WE SHOULD HAVE A MONTH WHERE EVERYTHING IS MADE AND PRESENTED BY AI?!?” I excitedly proclaimed. “Show how dehumanising some of these technologies can be, first-hand!”

And silence ensued…

It was an early meeting about the SKR Digital Dehumanisation campaign, and once again, I was over-excited by one of those ideas that just appeared in my mouth, seemingly out of nowhere.

In fairness, it sounded ridiculous. Having access to an AI and then having it create engaging, accurate and, importantly, factual content – that was the sort of thinking that felt decades away.

But…

and it was only a small but at that stage… there was something about it. And it lodged somewhere in the recesses of my brain.

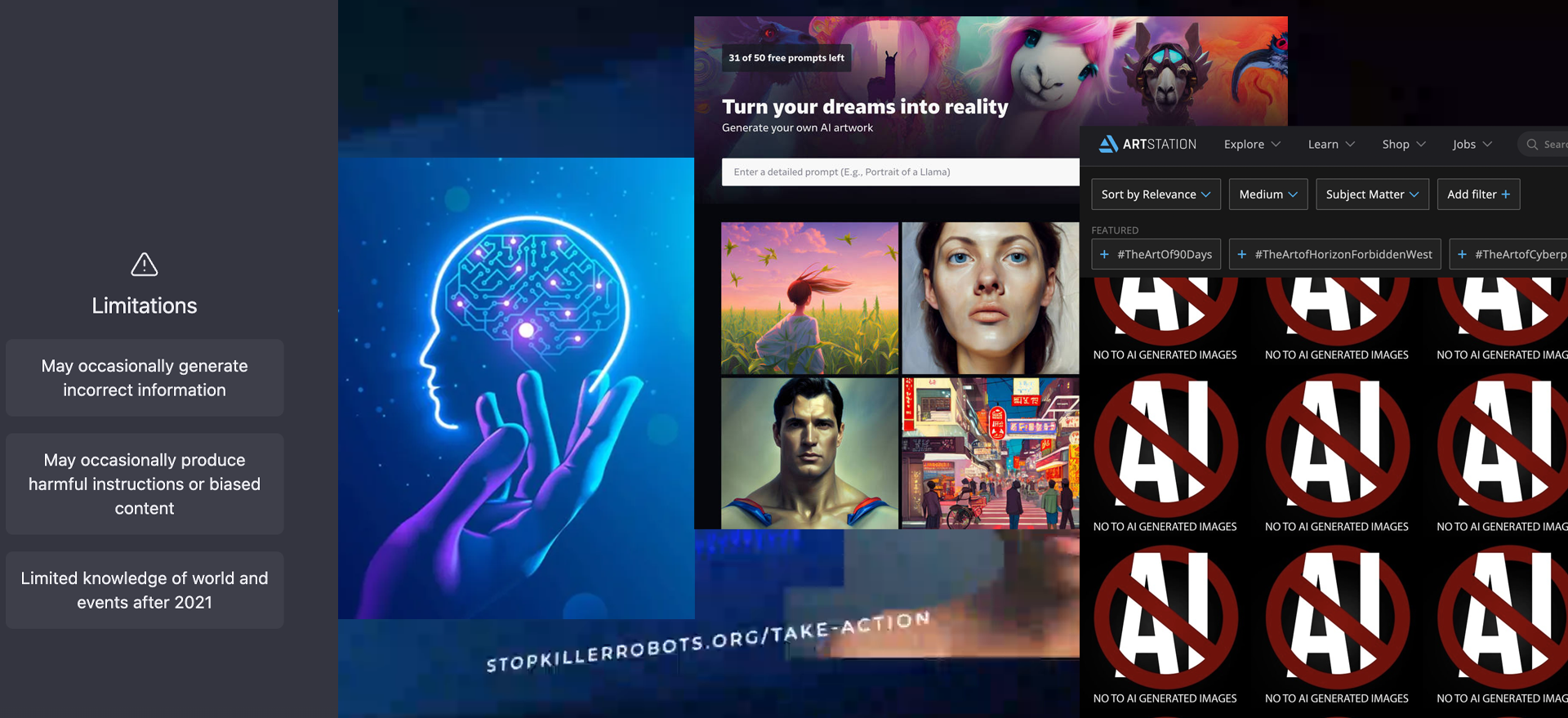

Time passed, and the more content we started planning, the more I was seeing and learning about OpenAI. The art world was up in arms about AI-generated ‘art’ and my creative friends were increasingly worried about their future. There wasn’t a question asked online that wasn’t answered by someone who had copied and pasted a response from Chat GPT.

And so, alongside all our research and planning on Digital Dehumanisation, I started tinkering…

I was curious about the content Chat GPT presents, where it comes from and how it’s modified/translated through OpenAI.

You see, the thing with information about the Stop Killer Robots campaign is that it’s VERY specific. Language has to be used in a specific way, and information has to be presented so that it addresses the nuances and specificities of the subject. There may appear to be little difference between ‘autonomous weapons’ and ‘fully autonomous weapons’, for example, but the addition of ‘fully’ is potentially an open invitation for lawmakers to wave through any number of atrocities in the name of progress and defence. It also causes no end of arguments in the YouTube comments section! Language this specific matters. A lot.

I’ve been working with the campaign since early 2021 and have written my fair share of content on the subject – after a while you begin to notice patterns. Syntax, structure and cadence all give clues not only to who wrote the content, but also to when.

And it’s this specificity that led me to the realisation that the content delivered by Chat GPT isn’t AI generated. It’s AI modified.

And that’s a BIG difference.

It’s not AI presenting its own thoughts, ideas and concepts. It’s AI presenting our human ideas, thoughts and concepts back at us (albeit with enough of a change to avoid direct litigation or copyright infringement claims). It’s not true AI, intelligent and aware; it’s marketing. Machine learning branded as AI.

And I noticed that asking Chat GPT questions on autonomous weapons and the Stop Killer Robots campaign would result in our own content being replayed back to us, tweaked just enough not to be a direct reproduction, but close enough that those who create this content would recognise it. Now for us, that’s no bad thing – the more our content gets out into the world, and the more people are aware of these issues, the better. (It’s worth noting, though, that if we were writers who made our living through our craft, it would be a wholly different matter, and quite a terrifying prospect.)

If that AI-generated content is based on ours, then we know it’s reasonably accurate. Accuracy matters because, as the leading authority on autonomous weapons, everything we share must be accurate. It must stand up to investigation and it must be as accessible as possible.

What it meant in this instance, though, was that the seemingly daft idea of having AI generate content on AI might actually be possible. Under supervision. There had already been too many instances of automation and Chat GPT going awry to let it loose on our content unsupervised.

Alongside this, our research into Digital Dehumanisation had me looking at ways in which humans can be affected by AI and autonomy – specifically where technology removes or replaces human involvement or responsibility through the use of autonomy. We’re not scaremongering – this is really happening.

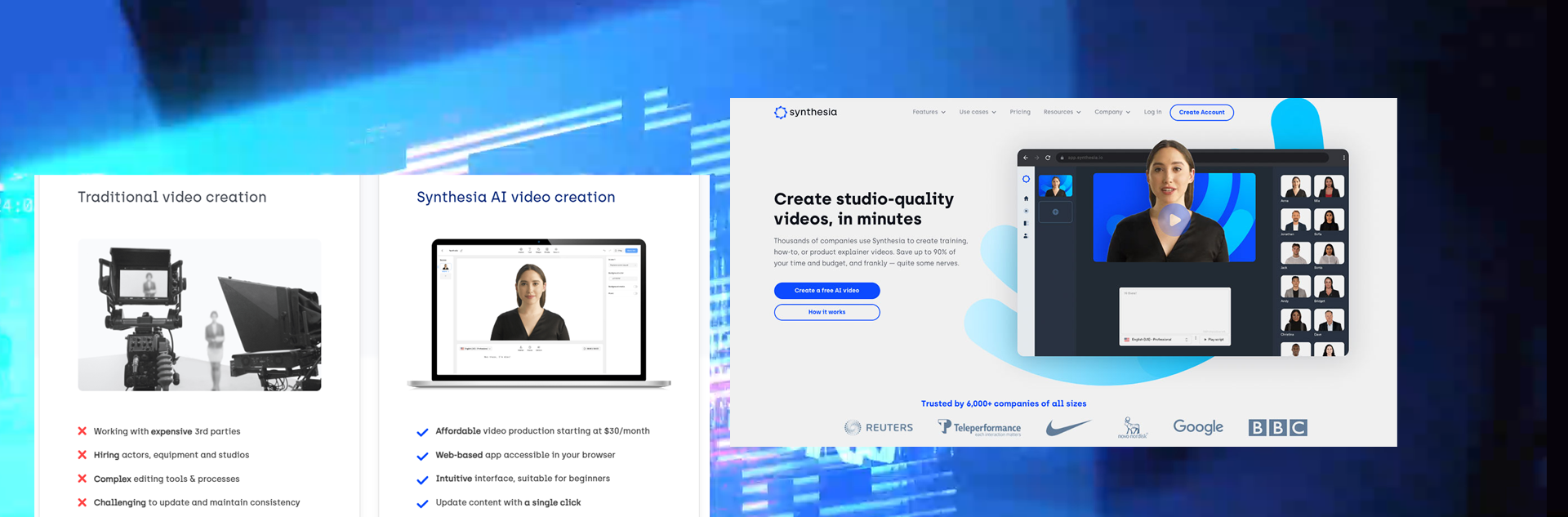

Before long I discovered Synthesia – an AI video creation platform. Thousands of companies use it to create training videos automatically, in 120 languages, saving up to 80% of their time and budget, according to Synthesia’s marketing. Those savings come from removing the need for humans. Their homepage even has a helpful chart illustrating the drawbacks of creating video the ‘traditional way’, complete with lots of red crosses to mark out the negatives of human involvement. Digital Dehumanisation driven by demand for increased profit margins.

The thought then struck me: what if I could use accessible technologies such as Chat GPT to generate questions to ask an AI on Autonomy and Digital Dehumanisation, and then get it to answer those questions itself? I could then feed the resulting text into Synthesia’s control panel and, repurposing its text-to-video function, have a digital analogue of a human seemingly respond to camera. That would show the dehumanising effects of these technologies directly: interviewing AI, on AI, using AI.

On paper it wasn’t difficult: ask the questions, copy and paste the answers into Synthesia, have that generate the content. In reality, though, it isn’t that straightforward. This isn’t true AI. It’s an extension of the technology that came before it. It is super impressive and does create an impact. It’s even, on the face of it, really quite disturbing when you find yourself looking down the barrel of what appears to be your own obsolescence. But it IS flawed. It’s not as simple as the marketing claims, and to make a realistic, believable human analogue I had to spend days editing the Chat GPT transcript to trick Synthesia’s engine into pronouncing the language correctly. Digital Dehumanisation, for example, will now and forever be spelled ‘dee-hew-man_ice-ation’ in my head. I can’t tell you how many variants of that I had to try to get it right, and even then, in the final output, there are still some giveaways as to those efforts.

As for assigning our digital avatar/virtual human/synthetic actor a name, Chat GPT had to be put through a range of questioning before we got a usable response. Since a recent update, it will let you assign a name ‘for conversational purposes’, but at the time I ended up having to get it to make both forename and surname from an amalgam of the names of OpenAI’s founders. (Since the update, Chat GPT is now very adamant that Elon Musk plays no part in OpenAI; it tells you as much even if you don’t ask. Interesting!) The name Giles Zamboski hasn’t been suggested since the update, even when I’ve given it the same prompt multiple times.

The way Giles appears is also very deliberate. We wanted to avoid implying a race or gender other than my own (there’s been a lot written about ‘virtual blackface’ in the media of late, which I would really encourage you to read), while at the same time suggesting a young, innovative ‘tech bro’. A lot of the ‘virtual actors’ available on Synthesia, when coupled with the suggested voices, are very obviously computer generated, though. So in crafting ‘Giles’ to appear as human-like as possible, to illustrate the point we were making, our options were limited.

It won’t be long before we start seeing tools that let you input content prompts and output full-fledged video content at a highly realistic level – YouTube video content on demand. Tailored to you, and based on the two decades’ worth of content we’ve been feeding the internet with. Our content. Human content. Not AI’s. But right now, you have to work at it and manipulate the system to get the outcome you need. It’s all VERY advanced, and it IS accessible, but in the immortal words of Public Enemy: ‘Don’t believe the hype’.

These technologies have the capacity to really improve things for humanity, and the innovations that come as a result will undoubtedly be incredible, but when their use is driven by finance, commerce, industry and the military, the benefit to humanity will ALWAYS be a secondary consideration.

And for what it’s worth, that’s really the fundamental point behind my own concerns over autonomous weapons: up the ante and you up the potential for problems. If advanced technology is STILL so prone to error that we need to modify our behaviour to work effectively with it, then how, and importantly why, are we seeing such a growing trend toward arming it?!? Toward allowing technology to make decisions over who lives and who dies. In my mind it’s marketing gone haywire, on a (military) industrial scale, and frankly, nobody in marketing should ever be allowed that sort of power. EVER.