Google and other companies should endorse the ban

Google and its parent company Alphabet are starting to address some of the ethical concerns raised by the development of artificial intelligence (AI) and machine learning, but they have not yet taken a position on the unchecked use of autonomy and AI in weapon systems. These and other technology companies, such as Amazon, Microsoft, and Oracle, should publicly endorse the call to ban fully autonomous weapons and commit never to help develop them. Doing so would support the rapidly expanding international effort to ensure that the decision to take a human life is never delegated to a machine, whether in warfare, in policing, or in other circumstances.

In recent months, calls have mounted for Google to commit never to help create weapon systems that would select and attack targets without meaningful human control. Last month, more than four thousand Google employees issued an open letter demanding that the company adopt a clear policy stating that neither Google nor its contractors will ever build “warfare technology.”

On 14 May, more than 800 scholars, academics, and researchers who study, teach about, and develop information technology released a statement in solidarity with the Google employees. It calls on the company to support an international treaty prohibiting autonomous weapon systems and to commit not to use the personal data it collects for military purposes.

In the Guardian on 16 May, three co-authors of the academic letter highlight key questions that Google faces, such as: “Should it use its state of the art artificial intelligence technologies, its best engineers, its cloud computing services, and the vast personal data that it collects to contribute to programs that advance the development of autonomous weapons? Should it proceed despite moral and ethical opposition by several thousand of its own employees?”

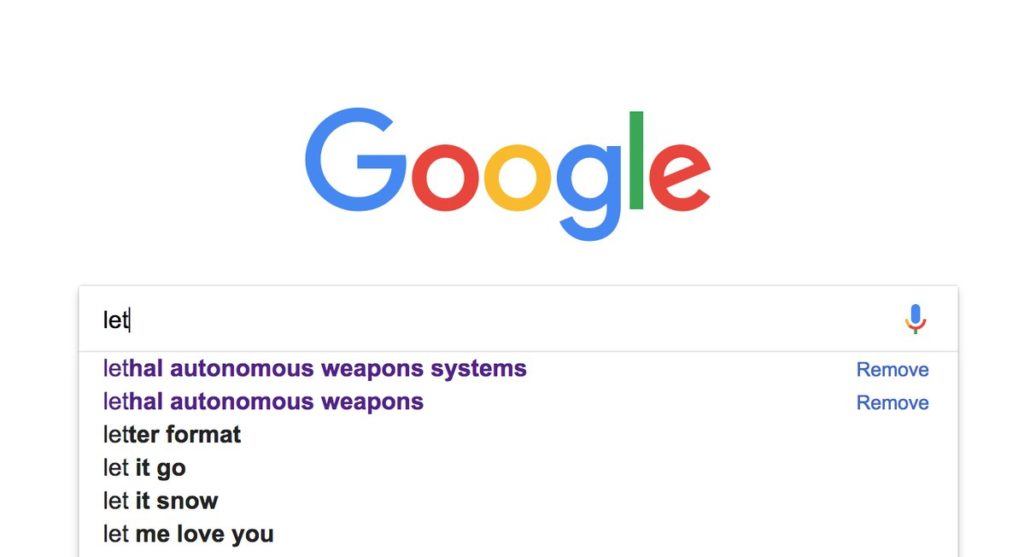

Previously, in a 12 March letter to the heads of Google and Alphabet, the Campaign to Stop Killer Robots recommended that the companies adopt “a proactive public policy” by committing never to engage in work aimed at the development and acquisition of fully autonomous weapons systems, also known as lethal autonomous weapons systems, and by publicly supporting the call for a ban.

All of these letters express concern over Google’s involvement in a Department of Defense-funded project to “assist in object recognition on unclassified data” contained in surveillance video footage collected by military drones. According to the Pentagon, Project Maven involves “developing and integrating computer-vision algorithms needed to help military and civilian analysts encumbered by the sheer volume of full-motion video data that DoD collects every day in support of counterinsurgency and counterterrorism operations.” The project, which began last year, seeks to turn the “enormous volume of data available to DoD into actionable intelligence and decision-quality insights at speed.”

Project Maven raises ethical and other questions about the appropriate use of machine learning and AI for military purposes. The Campaign to Stop Killer Robots is concerned that AI-driven identification of objects could quickly blur into, or shift toward, AI-driven identification of ‘targets’ as a basis for directing lethal force. This would give machines the capacity to determine what is a target, an unacceptably broad use of the technology. That is why the campaign is working to retain meaningful human control over the critical functions of identifying, selecting, and engaging targets.

Google representatives are engaged in a dialogue with the Campaign to Stop Killer Robots, and last month they provided campaign coordinator Mary Wareham with a statement saying that its work on Project Maven is “for non-offensive purposes and using open-source object recognition software available to any Google Cloud customer. The models are based on unclassified data only. The technology is used to flag images for human review and is intended to save lives and save people from having to do highly tedious work.”

In July 2015, high-profile Google employees including research director Peter Norvig, scholar Geoffrey Hinton, and AI chief Jeff Dean co-signed an open letter endorsed by thousands of AI experts that outlined the dangers posed by lethal autonomous weapons systems and called for a new treaty to ban the weapons.

At Google DeepMind, CEO Demis Hassabis, co-founder Mustafa Suleyman, and twenty engineers, developers, and research scientists also signed the 2015 letter. The following year, in a submission to a UK parliamentary committee, Google DeepMind stated: “We support a ban by international treaty on lethal autonomous weapons systems that select and locate targets and deploy lethal force against them without meaningful human control. We believe this is the best approach to averting the harmful consequences that would arise from the development and use of such weapons. We recommend the government support all efforts towards such a ban.”

Last month, Amazon’s Jeff Bezos expressed concern at the possible development of fully autonomous weapons, which he described as “genuinely scary,” and proposed a multilateral treaty to regulate them. The Campaign to Stop Killer Robots welcomes these remarks and encourages Amazon to endorse the call for a new treaty to prohibit fully autonomous weapons and pledge not to contribute to the development of these weapons, as Clearpath Robotics and others have done.

Issuing ethical principles means little if a company fails to act on fundamental challenges raised by military applications of autonomy and AI. Responsible companies should take seriously and publicly support the increasing calls for states to urgently negotiate a new treaty to prohibit fully autonomous weapons.

UPDATE: On 7 June, Google released its ethical principles, including a pledge not to develop artificial intelligence for use in weapons. Google staff forwarded the principles to the campaign coordinator and thanked the campaign for its input. The release followed news reported by Gizmodo on 1 June that Google has decided to end its participation in Project Maven when the contract expires next year.

For more information, see:

- Google employees’ letter (April)

- Academic letter (14 May)

- Campaign to Stop Killer Robots letters to Google and Alphabet (12 March) and Amazon (16 May).